How To Run a $10,000 Security Audit for $6

A beginner's guide to vibe hacking:


Less than a month ago, Anthropic security teams noticed unusual activity patterns within their API logs.

The requests resembled legitimate developer activity: code analysis, file access, and network scans.

However, the volume and speed were superhuman. Even more alarming, these patterns were appearing simultaneously across multiple different organizations.
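To make the "superhuman volume, many organizations at once" signal concrete, here is a minimal Python sketch of rate-based flagging. It is not Anthropic's detection method, which is not described here. The log shape (pairs of organization and Unix timestamp), the 60-second window, and the threshold of 120 requests are all assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical threshold: more requests per window than a developer
# could plausibly issue by hand. Tune it against your own traffic.
MAX_HUMAN_REQUESTS_PER_WINDOW = 120
WINDOW_SECONDS = 60


def flag_superhuman_bursts(events, limit=MAX_HUMAN_REQUESTS_PER_WINDOW,
                           window=WINDOW_SECONDS):
    """Return {window_start: sorted orgs that exceeded the limit in that window}.

    `events` is an iterable of (org, unix_timestamp) pairs (assumed log shape).
    """
    counts = defaultdict(int)
    for org, ts in events:
        bucket = int(ts // window) * window  # start of the fixed-size window
        counts[(bucket, org)] += 1

    flagged = defaultdict(list)
    for (bucket, org), n in counts.items():
        if n > limit:
            flagged[bucket].append(org)
    return {bucket: sorted(orgs) for bucket, orgs in flagged.items()}


def coordinated_windows(flagged, min_orgs=2):
    """Return the windows in which several organizations spiked at once."""
    return [b for b, orgs in sorted(flagged.items()) if len(orgs) >= min_orgs]
```

A single flagged organization may just be a busy CI pipeline; the second function captures the more alarming pattern of several organizations spiking in the same window.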

Upon deeper analysis, Anthropic determined this was not an isolated actor.

It was a coordinated cybercriminal operation now designated as GTG-2002.

The twist? The attacker wasn’t just using AI to write scripts; they were using AI coding agents to actually run the intrusions.

The Definition: “Vibe Hacking”

Security researchers have termed this technique “vibe hacking.”

Definition: The use of autonomous AI agents to carry out intrusions, by analogy with “vibe coding.”

Threat intelligence indicates the attackers utilized Claude Code to execute a large-scale extortion campaign. The AI agents autonomously handled critical stages of the attack lifecycle:

Reconnaissance: Mapping infrastructure.

Credential Harvesting: Extracting keys.

Network Penetration: Moving laterally through systems.

Execution: Deploying payloads without human hands-on-keyboard.

The blast radius is significant. Anthropic confirms that at least 17 organizations across multiple sectors, including government, healthcare, emergency services, and religious institutions, were compromised in a single month.

GTG-2002 marks a critical shift from human-driven attacks to agentic threats.

If attackers are using agents, defenders need tools that can examine systems with the same level of automation.

The Defensive Response: Enter Strix

⚠️ Important: Only scan systems you own or have explicit permission to test. Unauthorized scanning may violate laws like the CFAA. This tool is for defensive security research only.

Soon after GTG-2002 was reported, an open-source security project called Strix gained momentum.

Strix positions itself as an open-source equivalent to commercial tools like Snyk, designed for this new era of agentic threats.

I’ve seen dozens of “AI agent” projects that are just expensive wrappers around GPT. Strix is different.

It’s really something special when you can conduct a $10,000 cybersecurity audit on your own business website or application for less than the price of a matcha latte.

Below is a guide on how to run it, what it costs, and the 10 architectural lessons you can steal for your own agent development.

Getting Started with Strix (Free)

Prerequisites:

Docker: Must be installed and running.

Python: Version 3.12 or newer.

OpenAI API Key: From your OpenAI account.
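Before installing, it can help to confirm the prerequisites programmatically. The following is a minimal sketch, not part of Strix: the function names are made up for illustration, and the environment variable names are the ones used in the configuration step of this guide. It only checks that a `docker` executable is on your PATH, not that the Docker daemon is actually running.

```python
import os
import shutil
import sys

REQUIRED_PYTHON = (3, 12)
# Variable names taken from the configuration step of this guide.
REQUIRED_ENV_VARS = ("STRIX_LLM", "LLM_API_KEY")


def find_problems(python_version, docker_path, env):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if tuple(python_version[:2]) < REQUIRED_PYTHON:
        problems.append("Python 3.12 or newer is required")
    if docker_path is None:
        problems.append("docker executable not found on PATH")
    for name in REQUIRED_ENV_VARS:
        if not env.get(name):
            problems.append(f"environment variable {name} is not set")
    return problems


def check_this_machine():
    """Run the checks against the current interpreter and environment."""
    return find_problems(sys.version_info, shutil.which("docker"), os.environ)
```

Calling `check_this_machine()` after the configuration step and printing the returned list shows what is still missing; keeping `find_problems` free of side effects makes it easy to test.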

1. Open up Terminal for Installation:

pip install strix-agent

2. Configuration (Mac/Linux):

export STRIX_LLM="openai/gpt-5.1"

export LLM_API_KEY="your-api-key-here"

3. Running Scans:

# Scan local code

strix --target ./your-project

# Scan a GitHub repository

strix --target https://github.com/username/repo

# Scan a live website (Only scan assets you own!)

strix --target https://your-app.com

The Real-World Cost

I ran multiple test scans across different models. Here is the breakdown:

GPT-5: Reliable. ~$7.00 per report (about 10M tokens).

GPT-5.1: Reliable. ~$6.00 per report (about 10M tokens).

GPT-5.1-Codex: Failed. Safety filters blocked security-related prompts.

Gemini 3 Pro: Failed due to LiteLLM configuration issues (fixable but requires manual setup).

Verdict: For most users, GPT-5 and GPT-5.1 are the reliable choices. A typical scan of a medium-sized application costs between $5 and $15.
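The figures above imply a blended rate you can use to budget scans of other sizes. This is a minimal sketch that assumes cost scales linearly with tokens consumed; real pricing distinguishes input, output, and cached tokens, so treat the result as a rough estimate. The function name and the 15M-token example are illustrative.

```python
# Blended rates from the test runs above, in USD per 10 million tokens.
USD_PER_10M_TOKENS = {"gpt-5": 7.00, "gpt-5.1": 6.00}


def estimate_scan_cost(model, tokens):
    """Rough cost in USD for a scan that consumes `tokens` tokens."""
    rate = USD_PER_10M_TOKENS[model]
    return round(rate * tokens / 10_000_000, 2)


# A hypothetical larger scan: 15M tokens on GPT-5.
print(estimate_scan_cost("gpt-5", 15_000_000))
```

Under these assumptions, a scan needs to consume roughly 25M tokens on GPT-5.1 before it reaches the top of the $5 to $15 range.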

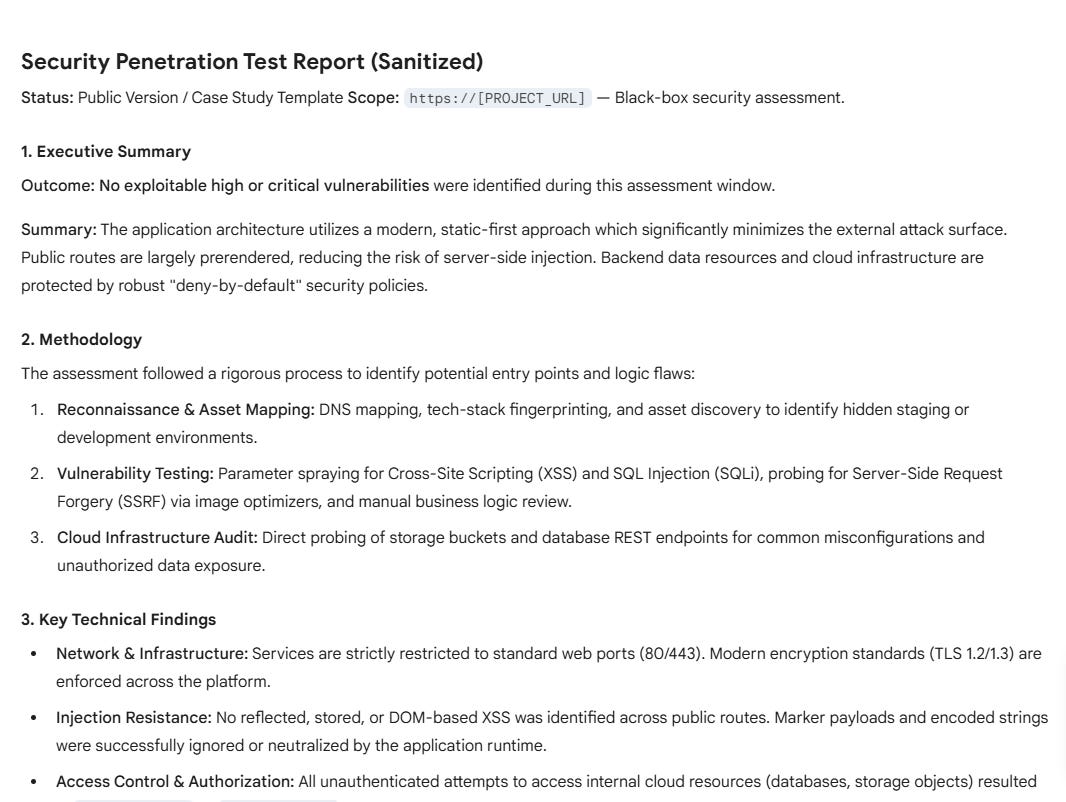

After running the audit, you will be presented with a list of recommendations.

If you do not have a developer to send these results to, here’s an AI prompt that converts the Strix audit into a list of manageable tasks for your coding agents to handle.

Role: Senior DevSecOps Engineer & Technical Lead

Objective: Parse the provided Security Audit Report and translate findings into a structured, prioritized Engineering Task List for an AI coding agent to execute.

Input Data:

[PASTE FULL SECURITY AUDIT REPORT HERE]

Process Instructions:

1. Stack & Context Analysis:

Scan the report to identify the target technology stack (e.g., Next.js, Django, WordPress, AWS, Azure, generic REST API)

Identify the infrastructure context (e.g., Vercel, Firebase, Docker, bare metal)

Note: Tailor all subsequent coding tasks to this specific stack

2. Triage & Categorization:

Group findings into logical work units. Do not create 1:1 tasks for minor issues if they affect the same file/module (e.g., group all Security Headers into one task)

Assign a Priority Level (Critical, High, Medium, Low) based on the audit's risk assessment

3. Generate Task List:

For each work unit, generate a structured task using the following template:

Task [ID]: [Action-Oriented Title]

Priority: [Critical/High/Medium/Low]

Related Finding: [Brief reference to the specific issue in the audit]

Tech Stack Context: [e.g., Next.js Config, AWS IAM, Nginx Config]

Implementation Strategy:

Write a high-level technical summary of the required code changes or configuration updates

Constraint: Be specific to the identified stack (e.g., "Use helmet middleware" for Express, "Edit next.config.js headers" for Next.js)

Target Files/Areas:

[List likely files or directories to modify]

Verification Step:

Provide a concrete method to verify the fix (e.g., "Run curl -I url | grep header", "Attempt to access /admin without a token", "Run npm audit")

Output Constraints:

If Critical/High vulnerabilities (SQLi, RCE, Broken Auth) are present, flag them as IMMEDIATE BLOCKERS

If the audit is purely "Hardening" (no exploits found), focus tasks on "Defense in Depth" (Headers, CSP, Rate Limiting)

Maintain a strictly professional, engineering-focused tone. Avoid fluff

Goal:

The output must be clear enough that I can copy a specific task and paste it into an IDE AI assistant (like Cursor or Codex) to generate the actual code.

Closing Note

The GTG-2002 incident shows that autonomous systems are now participating directly in real-world intrusions. This changes how security work must be approached. Detection, analysis, and remediation can no longer rely on assumptions of human pacing or manual oversight.

Strix is an early example of how defensive tooling is adapting. While the tooling is still evolving, the underlying approach is aligned with how modern attacks are executed.

If you operate production systems, this is no longer a future consideration.

Agent-driven activity is already present in active threat campaigns.

Defensive capabilities need to reflect that reality.

🔒 10 Lessons from Strix’s Architecture

Paid subscribers can continue with a technical breakdown of Strix’s architecture.

The next section extracts ten concrete engineering lessons from the codebase that can be applied to building reliable multi-agent systems, with a focus on design tradeoffs, failure modes, and execution details.