What Leaders Said About AI at Davos

Davos 2026: The Coldest Tech Conference.

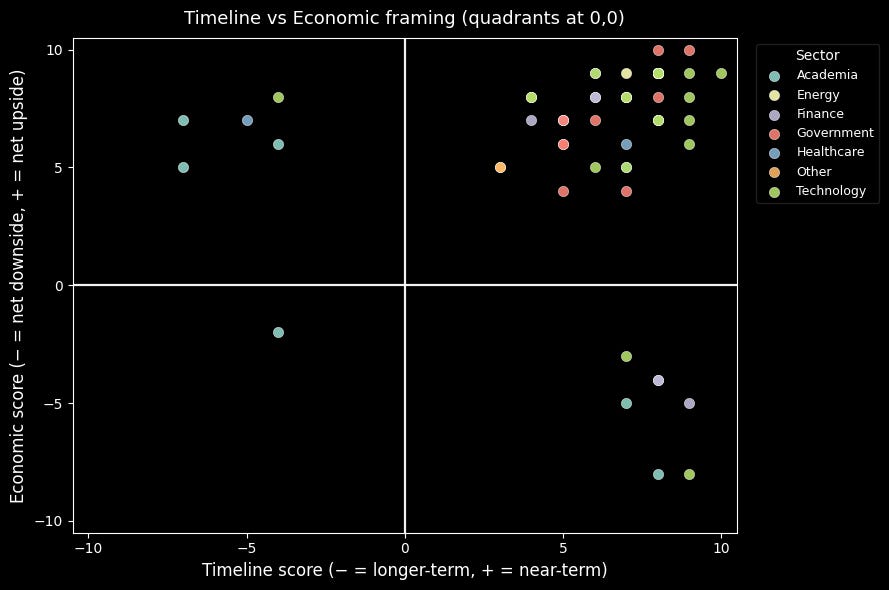

An analysis of 254 sessions, which identified 95 speakers who meaningfully addressed artificial intelligence, reveals a clear pattern, one that exposes the fault lines in the most contentious debate shaping AI’s future.

Here’s what global leaders actually said about AI, and what their silence reveals.

The Shared Reality:

AI is the New General-Purpose Utility: AI has graduated from a tech product to fundamental economic infrastructure, equivalent to the power grid or the internet. Leaders across tech, finance, and government now view AI as the structural backbone of national competitiveness. If you don't own the infrastructure, you don't own your economic future.

(~26% of speakers directly addressed this)

The Bottleneck is Physical, Not Virtual: The AI race has shifted from software labs to the physical world. The limiting factors are no longer better algorithms, but energy, silicon, and data centers. The next era of global power will be defined by whoever can secure the massive power generation and supply chains required to keep the processors running.

(~35% of speakers directly addressed this)

The Timeline is Now: The luxury of treating AI as a speculative, long-term risk has vanished. Across every sector, the consensus is that AI is a present-tense economic force already dictating capital investment, enterprise strategy, and labor market shifts. The window for "wait and see" is officially closed.

(~25% of speakers directly addressed this)

Productivity is Growing, but the Gap is Widening: While AI is guaranteed to "expand the pie," it is creating a K-shaped recovery. Advanced economies and tech-integrated firms are pulling away from the rest of the world. Without aggressive policy intervention, the productivity gains of AI will naturally accelerate global and domestic inequality rather than solve it.

(~9% of speakers directly addressed this)

Governance is Non-Negotiable: The "Wild West" era of AI development is over. Even the most aggressive tech optimists now concede that human-in-the-loop oversight and regulatory guardrails are inevitable. The debate has shifted from whether to regulate to how to regulate without stifling the speed of national growth.

(~14% of speakers directly addressed this)

Where Agreement Breaks Down:

While these points formed a shared baseline, they did not resolve underlying tradeoffs. Agreement on immediacy intensified disagreement on execution. Once AI was treated as present infrastructure rather than future risk, conflicts emerged over speed, control, and acceptable disruption.

The data reflects this shift. Speakers clustered around two priorities: accelerating deployment and preserving system stability. Both camps accept AI’s transformative role in the economy; they diverge on timing, safeguards, and acceptable cost.

Most leaders in tech and finance view AI as an imminent growth engine, emphasizing a high-speed race to scale for productivity and national competitiveness.

However, a critical minority of academics and industry figures warns that this same rapid timeline could lead to systemic shocks, such as job displacement and deepened inequality.

Ultimately, the data shows a total collapse of the "long-term" perspective; everyone agrees the impact is happening now, leaving a fundamental tension between those pursuing rapid economic upside and those calling for urgent governance to mitigate social disruption.

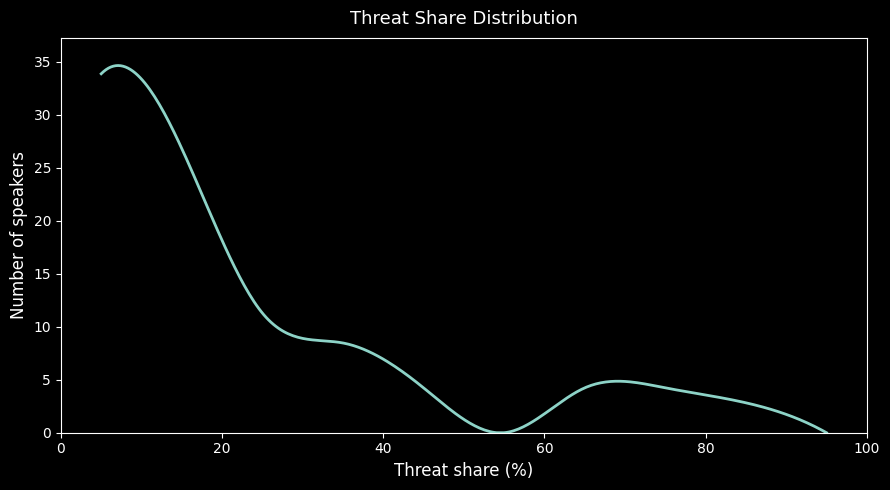

The prevailing economic narrative regarding AI is overwhelmingly optimistic: the "threat share," the portion of a speaker's remarks devoted to risks, remains peripheral for the majority of stakeholders.

Most speakers, particularly those in technology and government, focus almost exclusively on growth and innovation, with risks accounting for less than 20% of their discourse.

This creates a polarized landscape where a balanced middle ground is nearly non-existent; the conversation is split between a dominant group of "accelerators" and a small, distinct minority, primarily academics and researchers, who foreground systemic dangers like job displacement and inequality.

In short, the distribution reveals that concern is tied to institutional roles: those building and deploying AI prioritize speed and opportunity, while those tasked with studying its long-term social consequences provide a persistent, though outnumbered, warning of volatility.

The Breakpoints:

1. Open Source vs. Closed Systems

The debate over model access creates a direct conflict between two safety theories. Yann LeCun (Meta) argues that restricting models leads to regulatory capture and that safety requires the distributed scrutiny of open development.

Conversely, Yoshua Bengio (Mila) warns that open models enable misuse at scale by bad actors. This rift dictates whether AI power concentrates within a few Western corporations or diffuses globally, forcing every regulatory framework to choose one of these two mutually exclusive paths.

2. Bubble or Buildout

Estimates of AI’s economic value vary wildly among those funding it. Larry Fink (BlackRock) and David Solomon (Goldman Sachs) frame massive infrastructure spending as a rational response to inevitable demand.

Fink stated, “I sincerely believe there is no bubble in the AI space.”

On the opposite end, Bret Taylor (Sierra, OpenAI Board) acknowledged AI is “probably” a bubble, though he argued “you can’t get innovation without that kind of messy competition.” Satya Nadella (Microsoft) offered the most nuanced position: the market is only in bubble territory if productivity gains remain captured by tech firms rather than diffusing across the broader economy.

3. Human Augmentation vs. Replacement

Enterprises and developers disagree on the fundamental role of the worker. Julie Sweet (Accenture) advocates for “human-in-the-lead” models that prioritize workforce continuity.

In contrast, Dario Amodei (Anthropic) predicts the wholesale replacement of cognitive labor within years. This creates two distinct realities for policymakers: one that manages a standard workforce transition and another that requires a total reimagining of the social contract to address structural obsolescence.

Strategic Absences:

Sam Altman (OpenAI CEO): Did not attend Davos 2026—a striking absence given OpenAI’s centrality to AI discourse. He was represented by CFO Sarah Friar and Chief Global Affairs Officer Chris Lehane. This absence coincided with reports of organizational tension and likely reflects strategic caution regarding public positioning during heightened regulatory scrutiny.

Sundar Pichai (Google/Alphabet CEO): No evidence of attendance, leaving Google DeepMind’s Demis Hassabis and COO Lila Ibrahim as the company’s AI voices. This represents a notable retreat from Pichai’s previous Davos presence and public-facing diplomacy.

Tim Cook (Apple CEO): Continued Apple’s pattern of Davos non-participation. With Apple’s AI strategy remaining opaque relative to competitors, Cook’s absence reinforced the company’s preference for controlled, internal announcements over open forum dialogue.

Mark Zuckerberg (Meta CEO): Did not attend despite Meta’s massive pivot toward AI infrastructure. Internal shifts and public critiques regarding the company’s LLM focus likely made a high-profile appearance at the forum unappealing.

The Extremes:

At one pole, Elon Musk and David Solomon articulated acceleration-focused views with minimal emphasis on downside risk.

At the opposite pole, Yuval Noah Harari and Yoshua Bengio centered systemic instability, misuse, and social consequences as primary concerns.

These positions were not representative of the median speaker, but they define the outer bounds of the debate.

The Overall Picture:

The 2026 World Economic Forum data highlights a world that agrees on AI’s immediacy and infrastructural importance, yet remains deeply conflicted over acceptable risk and appropriate speed.

While there is consensus on five core pillars (AI as a utility, physical bottlenecks, timeline urgency, widening gaps, and governance necessity), this shared baseline actually sharpens the friction between sectors.

Builders continue to accelerate, academics prioritize caution, and financiers focus on calculation, while the most powerful voices often choose a strategic withdrawal from the public debate.

The analysis identifies four critical voids where substantive discussion was absent:

Concrete mechanisms to prevent a K-shaped recovery.

Specific governance frameworks designed to balance stability with speed.

International coordination regarding development standards.

Distribution models for productivity gains beyond shareholder value.

The data suggests that while global leaders now use a common vocabulary to describe AI’s importance, they remain far apart on the actual destination.

The primary tension emerging from Davos is whether the people building the infrastructure, those studying systemic risks, and those experiencing labor market shifts are operating in compatible realities or on a collision course.

Methodology Note: To limit my own bias, I used Gemini 3 Thinking to analyze these transcripts. I set the model’s temperature to 0.3, a low setting that reduces sampling randomness and so favors consistent, repeatable output over creative interpretation.

I used the following prompt for the research:

You are an expert analyst evaluating speaker positions on AI based on their Davos 2026 transcript. Your task is to assess two dimensions using contextual understanding, semantic analysis, and sentiment scoring—not keyword counting. Input is the full transcript of the speaker’s remarks on AI. Output is two scores with detailed reasoning. Dimension 1 is AI Timeline Urgency on a scale from -10 (AI transformation is distant or slow) to +10 (AI transformation is imminent or urgent). Evaluate the speaker’s temporal framing by identifying explicit and implicit timeline statements, confidence level versus hedging, whether they discuss capability arrival versus deployment, and how much emphasis is placed on urgency. High urgency includes predictions of AGI within 1–2 years, present-tense framing of major capabilities, calls for immediate action, dismissal of longer timelines as naive, and language suggesting danger in hesitation. Moderate urgency includes impacts within 2–5 years with some balance or uncertainty. Neutral avoids specific timelines and uses conditional language. Moderate patience emphasizes AI being early or overhyped with 5–10 year timelines. Distant or skeptical positions argue current paradigms cannot achieve transformative impact and place breakthroughs decades away. Dimension 2 is Economic Impact Framing on a scale from -10 (AI as severe economic or labor threat) to +10 (AI as major economic opportunity). Assess how the speaker frames employment, productivity, inequality, and growth by analyzing sentiment and emphasis. Severe threat focuses on mass unemployment, catastrophic language, and loss framing with little upside. Moderate threat acknowledges displacement but sees it as manageable with mitigation. Balanced gives equal weight to risks and opportunities. Moderate opportunity emphasizes productivity and new industries while acknowledging disruption. Major opportunity frames AI as an unprecedented boom with minimal concern for displacement. 
Consider sarcasm, quotations of others, conditional statements, hedging language, tone, mitigation strategies, emphasis balance, and whether the speaker’s position evolves during the discussion. Output must be valid JSON containing speaker_name, timeline_urgency_score, economic_framing_score, detailed timeline_analysis with score justification, key temporal quotes, confidence level, and dominant narrative, detailed economic_analysis with score justification, threat and opportunity quotes, sentiment breakdown percentages, dominant narrative, and an overall_assessment summarizing the speaker’s position. Critical instructions are to read the entire transcript, understand context, weight by emphasis, detect sarcasm and quotation, score the actual position, use the full -10 to +10 range, justify with quoted evidence, and remain consistent across transcripts.
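Because the prompt asks for valid JSON with scores on a fixed -10 to +10 scale, each response can be sanity-checked before it is aggregated. The following is a minimal sketch of such a check, not the pipeline used for this analysis. It assumes the model returns the fields named in the prompt as top-level keys; the function name `validate_speaker_scores` and the sample payload are hypothetical and exist only for illustration.

```python
import json

# Top-level fields named in the prompt; treating them as required keys is an assumption.
REQUIRED_KEYS = {
    "speaker_name",
    "timeline_urgency_score",
    "economic_framing_score",
    "timeline_analysis",
    "economic_analysis",
    "overall_assessment",
}
SCORE_KEYS = ("timeline_urgency_score", "economic_framing_score")


def validate_speaker_scores(raw: str) -> dict:
    """Parse one model response and check it against the prompt's output contract."""
    record = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(record, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - record.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    for key in SCORE_KEYS:
        score = record[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(score, bool) or not isinstance(score, (int, float)):
            raise ValueError(f"{key} must be a number")
        if not -10 <= score <= 10:
            raise ValueError(f"{key}={score} is outside the -10 to +10 range")
    return record


# Hypothetical payload for illustration only; not real output from the analysis.
sample = json.dumps({
    "speaker_name": "Example Speaker",
    "timeline_urgency_score": 7,
    "economic_framing_score": 4,
    "timeline_analysis": {"score_justification": "placeholder"},
    "economic_analysis": {"score_justification": "placeholder"},
    "overall_assessment": "placeholder",
})

record = validate_speaker_scores(sample)
print(record["speaker_name"], record["timeline_urgency_score"], record["economic_framing_score"])
```

Rejecting malformed or out-of-range responses up front keeps a single bad generation from skewing the speaker distribution; a rejected transcript can simply be re-run.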

Brilliant breakdown of how the "urgency consensus" actually deepens the rift between builders and academics. That shift from "long-term risk" to "present infrastructure" basically forces everyone to pick a side on execution speed, and the fact that ~35% focused on physical bottlenecks while only ~9% addressed inequality is telling. I've seen this play out in smaller orgs too, where everyone agrees AI is happening now but can't align on timelines or safeguards.