Learn To Prompt (2025 Guide)

Better Questions, Better Prompts

This guide is useful for anyone who prompts, from beginners to experts.

TABLE OF CONTENTS

PREFACE: PROMPT OR PERISH

Into the Fog

The Mental Crash

One Prompt to Survive

From Cockpit to Keyboard

CHAPTER ONE: QUESTION THE QUESTION

Building the Habit of Inquiry

Defining a New Cognitive Skill

Asking Questions That Work

Using Inquiry Modes Effectively

Measuring the Impact of Better Questions

Asking With Intention

Achieving Precision in Modular Systems

CHAPTER TWO: THE MODELS JUST WANT TO LEARN

Using the RTF Prompt Framework

Understanding Tokens and Context Windows

Applying Core Action Patterns

Fixing Failures in Output

CHAPTER THREE: HOW TO PROMPT ANYTHING

Prompting for Text Generation

Prompting for Image Generation

Prompting for Video Generation

Prompting Custom GPTs

Writing System Prompts

Exploring Careers in Prompt Engineering

WHATS NEXT

The Conversation So Far (3/4)

“The limits of my language, mean the limits of my world.”

- Ludwig Wittgenstein

July 04 2020.

Steinbach Airport (CKK7) , Manitoba Canada

PROMPT OR PERISH:

The air smelt of campfire smoke, airplane fuel, and Tim Hortons coffee.

A mix of danger, comfort, and routine. An olfactory cocktail for ambition.

Today was the day of my 300 mile flight. A rite of passage for any pilot moving from “just for fun” to a paid professional.

To earn a commercial pilot license in Canada, you require one of these flights.

I checked the maps. The weather. The plane. Our plan seemed solid. Clouds were forecast at 8,000 feet. We’d scud run at 6,500 until skies open up.

…

We were three 17-year-olds in a rented Piper PA28.

The airplane equivalent of a 1999 Honda Civic.

The only thing missing were the rattling subwoofers.

We turned on our cameras, completed our checklist and fired up the engines.

“Steinbach South Traffic, Foxtrot Echo India November Rolling Runway 35”

We lifted off, onto our boys trip to Moose Jaw, ETA 3:26PM.

Chasing a dream of clearer skies.

In the cockpit was myself (the pilot in command) and my two buddies, both unlicensed student pilots along for the ride.

I was cleared for visual flight only.

This meant both myself and the plane were not equipped or approved to fly in clouds.

Flying blind with no training is one of the deadliest mistakes in general aviation. The stats are unforgiving.

The Federal Aviation Administration and the AOPA Air Safety Institute find the fatality rate for pilots in these scenarios is over 80% .

Climb-Out

The Cherokee climbed smoothly. The engine hummed.

Everything looked textbook.

Outside, overcast clouds floated harmlessly above us.

I kept my eyes on my usual scan, checking the gauges, maintaining my airspeed, staying on course. A reassuring rhythm.

The boys were laughing about something. I don’t remember what.

However, I do recall how serene those life moments with friends can be.

Sunlight peeking through the gaps of the cloud.

That late-morning prairie glow.

For a while, it felt like freedom.

5 Mile Visibility…

We leveled at 6,500 feet. The weather held as expected.

But something felt off. The clouds ahead looked closer than they should be.

Strange. I wasn’t climbing.

They were supposed to be at 8,000 feet.

4 Mile Visibility…

I brushed it off. Probably an illusion. Worst case, I’d descend.

From the backseat, my buddy grinned.

“Hey Max, you still owe me seven bucks for that Timmy’s breakfast.”

3 Mile Visibility…

The horizon vanishes, consumed by an insidious white fog.

I snapped, “Dude, not now. We just lost visual.”

2 Mile Visibility…

As the windshield turns blank, everyone goes quiet.

What’s left is our reflections.

1 Mile Visibility…

The impending doom sets in.

My hands are slipping off the controls.

I leaned left.

A memory of earth flashed past the side window.

…

Then nothing.

No ground. No sky. No horizon.

…

My imagination conjured headlines and news reports.

Visions of our sorrowed mothers.

And silence.

Live Footage From The Cockpit

In moments like these, something else kicks in.

The situation presented itself as a do or quite literally die.

The quality of every action and question had to be perfect.

What no one prepares you for is the mental part, the fog inside your own head.

The disorientation is guaranteed. Reorientation is uncertain.

Doubt creeps in and logic slips away.

With my waning fuel, limited energy, and a wall of white consuming the sky, there was no tolerance for error.

My saving grace was a simple mnemonic from flight school.

A three word phrase that became my tool for survival.

It cleared my mind, forcing me to isolate my attention and land safely.

It goes:

AVIATE

Fly the plane. That comes first.Handle the immediate, highest-priority crisis first.

NAVIGATE

Figure out where you are and where you're headed.Once stable, orient yourself and form a plan.

COMMUNICATE

Then, and only then, speak.With a plan in hand, purposefully engage others.

The mnemonic collapsed a thousand panicked questions into a single, focused prompt.

That crisis taught me that the most dangerous fog is not in the sky, but in the mind. It is the fog of ambiguity.

One clear lesson:

Better questions. Better prompts. Better results.

Today, I apply this same principle of structured thinking to my work in prompting.

Whether consulting enterprise leaders or interacting with new technology, the core challenge is the same.

The quality of our questions and the precision of our language determine if we find clarity or remain lost in the fog.

Let’s begin.

Question The Question

Chapter One

A single, well-aimed question can move more mass than a thousand answers.

When Tesla reimagined the electric grid, when Einstein ripped apart Newtonian spacetime, when a junior engineer spots the flaw before launch—each inflection point began with a sentence ending in a question mark.

Consider one such moment that nearly ended civilization itself.

On September 26, 1983, Lieutenant Colonel Stanislav Petrov sat at his monitoring station in Serpukhov-15, a townlet right outside Moscow.

Soviet Union’s early-warning system (OKO) detected what appeared to be 5 incoming U.S. ICBMs.

Protocol demanded he immediately report the attack, triggering massive nuclear retaliation causing mutual assured destruction.

But Petrov paused. He asked himself:

Why only five missiles? Why would America launch such a small first strike?

Something felt wrong.

He reported it as a false alarm.

He was right. A rare alignment of sunlight and satellites had created phantom missiles on the radar. His questions—his refusal to accept the first answer—prevented nuclear war.

In moments of crisis, the person who stops to ask, “But does this make sense?” can save the world.

Petrov worked with partial data, immense time pressure, and the highest possible consequences. He didn’t have perfect knowledge; he had one chance to ask the right question.

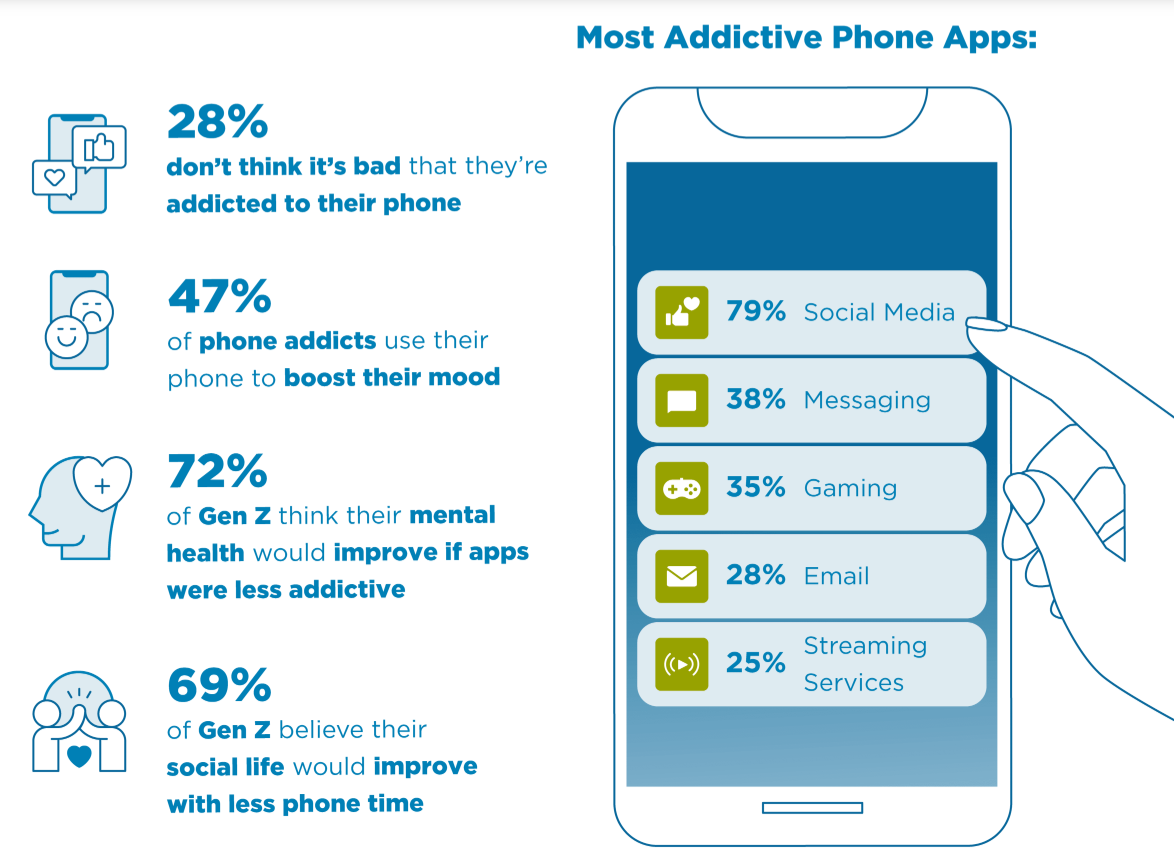

Most of us aren't facing nuclear alerts. Instead, we're drowning in a different kind of overwhelm, a world flooding us with answers.

A tsunami of tales told by recommendation algorithms.

(still) In this abundance of information, we've forgotten the one tool that truly guides us: the question.

The information we consume shapes our beliefs, and those beliefs forge the very questions we ask.

This cycle defines what we notice, what we create, and how we think.

With the rise of AI, asking a great question has continued to be our most valuable skill.

Yet, just as it becomes critical, we're losing the art of asking.

Our creativity is being outsourced to machines, and our conversations are paying the price.

Center for Humane Technology: Find more from Tristan

The emergence of generative AI tools has transformed "asking good questions", once considered a soft skill, into something richer.

The stakes have never been higher, yet we're losing the very capacity that makes us most human.

The mass desocialization caused by attention-hijacking AI algorithms have reduced the collective agency of humanity.

Many people are losing the art of communication, particularly the delicate craft of asking questions that unlock understanding.

We find ourselves trapped in patterns of inquiry that feel increasingly mechanical, divorced from genuine human curiosity.

The questions we encounter today follow disturbingly predictable patterns:

→ Overly Scripted: They lack genuine curiosity, feeling robotic and pre-planned, failing to adapt to the nuances of the moment—as if we've outsourced our wonder to algorithmic templates. ‘Sound Fair Enough?’

→ Closed to Reflection: They seek simple 'yes' or 'no' answers, leaving no room for deeper thought, nuance, or exploratory discussion—mirroring the binary logic of machines. ‘Its going well today?’

→ Emotionally Indifferent: Asked without regard for context, timing, or the psychological state of the recipient, they feel intrusive or dismissive—a symptom of our growing disconnection from emotional intelligence. ‘Why haven’t you fixed this yet?’

→ Attached to Preconceived Outcomes: Designed to subtly manipulate toward a specific answer or action, they prioritize control over discovery—reflecting our anxiety-driven need for certainty in an uncertain world. ‘Don’t you think this is the best option?’

→ Front-loaded and Forceful: They bombard the recipient or AI with excessive information or demand immediate, complex responses without allowing for cognitive processing—as if urgency could substitute for depth. ‘Considering everything we’ve discussed over the past three months, all the context in the documents I sent, and the urgency of the deadline, what’s the exact next move that will fix this?’

These mechanized patterns of inquiry shut down conversation, create psychological friction, erode trust, and lead to catastrophic outcomes whether in a critical flight situation, a high-stakes business negotiation, or an interaction with AI systems that mirror back our own limitations.

Every project budget should list an invisible line item: Unasked Questions. They are the silent tax on velocity.

So, if a question once saved the world, a better question might now build the one we want.

Let’s learn to ask them.

A NEW KIND OF SKILL

The emergence of generative AI tools has transformed "asking good questions"—once considered a soft skill—into something richer: a Semi Skill.

Semi Skill = Soft Skill + Hard Skill

These are abilities that require both the empathy and nuance of human interaction and the structured thinking and precision of technical execution.

Semi Skills Definition (First Principles): A semi skill is a multi-dimensional ability that requires both the human-centered intuition of soft skills and the systematic rigor of hard skills—blending emotional resonance with technical execution.

HOW TO ASK HIGH AGENCY QUESTIONS

High-agency questions possess three distinct characteristics that separate them from the noise of everyday inquiry.

Complexity Compression A useful question transforms an overwhelming problem into something testable or explorable. The question becomes a lens that focuses scattered information into actionable insight.

Output Generation High agency questions create psychological momentum. They lead directly to decisions, frameworks, actions, or clear priorities. The question itself becomes a catalyst for forward movement.

Reusability Factor Strong questions evolve into cognitive tools. Others can deploy them across different situations to produce clarity or insight. The question transcends its original context to become a repeatable method for thinking.

CORE PRINCIPLES OF A HIGH AGENCY QUESTION

Driven by curiosity - Ask because you want to understand, not to impress, direct, or manipulate.

Rooted in the present - Ask based on what’s happening now, not from a script.

Opens reflection - Leave space. Avoid questions that push people toward a fixed answer.

Emotionally attuned - Timing and tone matter. Match the question to the context.

Detached from outcome - The goal is discovery, not control. Let the answer surprise you.

Layered over time - Start shallow. Depth comes from trust, not force.

Reciprocal - Questions should invite connection. The best ones often invite one in return.

Understanding these principles is the first step. The next is knowing which type of question to deploy for a specific purpose.

MODES OF INQUIRY

Choose questions that fit the purpose. Excellent questions can be powerful tools for clear thinking, deeper understanding, and transformative conversations.

By thoughtfully framing our inquiries, we can unlock new perspectives and drive meaningful progress, both personally and professionally.

Here are some of the best examples of powerful questions, categorized by their purpose:

Reflection: Use these questions to get clear on your own thoughts and feelings.

"What's the one thing that's taking up most of my mental space this week?"

"When was the last time I felt I made a real breakthrough? What was different then?"

"If I had an extra hour in the day, what's the one thing I would use it for?"

Exploration: Use these questions to understand how other people think and why they made their choices.

"What's the story behind why you decided to do it that way?"

"Help me see this from your point of view. What am I missing?"

"Walk me through your thinking process from start to finish."

Foundations: Use these questions to check the basic assumptions a project or idea is built on.

"What are we all taking for granted here? What if that isn't true?"

"If this plan were to fail, what would be the most likely reason?"

"If we were starting this from scratch today, what would we do differently?"

Clarity: Use these questions to make ideas simple, specific, and easy to understand.

"How would you explain this to someone in a completely different field?"

"Can you give me a specific example of what you mean?"

"If we could only measure one thing to know if this is successful, what would it be?"

Vision: Use these questions to think bigger and imagine future possibilities.

"If we had no limitations, what would be the ultimate dream version of this?"

"How could we make this not just a little better, but totally different from the competition?"

“How can I operate with 10x more agency?”

THREE-LAYER CONVERSATION FLOW

Opener - “What’s been on your mind?”

Elaborator - “What’s been challenging about that?”

Meaning Layer - “What does that tell you about what matters to you right now?”

THE IMPACT OF BETTER QUESTIONS

Increase your signal to noise ratio - You cut through distractions and focus on what actually moves the needle.

Builds stronger trust - Thoughtful questions show you're listening. People open up when they feel heard.

Sharpen your tools - AI and other resources perform better when you frame requests precisely.

Restore momentum - The right question breaks through mental blocks when you're stuck.

Structure your thinking - Consistent questioning builds mental frameworks and systematic approaches to problems.

Conversely, consider the project that fails not from a lack of answers, but from a lack of the right questions. A team asks 'Can we ship this by Friday?' instead of 'What does 'done' look like?', leading to a flawed launch.

The cost of a bad question is a crisis a good question could have prevented.

Every innovative idea, every meaningful connection, every successful prompt for an AI, begins with a well-formed question.

Questions lead to invention. They pull us out of the status quo, forcing us to imagine possibilities beyond what currently exists.

Questions lead to cooperation. They open dialogues, bridge divides, and align diverse strategic perspectives towards a common goal.

Questions lead to resources. They uncover hidden opportunities, identify crucial information, and reveal paths to support and growth.

Questions lead to relationships. They realize understanding, build empathy, and deepen connections by inviting genuine sharing.

Questions That Cut to the Chase

Asking the right questions can be a superpower. I learned this firsthand while flying over Manitoba, where a single, well-aimed question literally saved my life.

Here’s how you can craft your own powerful questions:

Go Beyond "Yes" or "No." Use words like "What," "How," and "Why" to unlock deeper conversations. Instead of asking, "Is this the best plan?" try, "What other options did we consider?"

Hunt for Stories. Vague questions get vague answers. Ask for the story or a specific example behind a decision. This will give you revealing experiences, not just abstract thoughts.

Gently Poke the Bear. Uncover hidden assumptions by framing your question as a team effort. Try, "What are we all taking for granted?" or "What rule could we break here?" This invites collaboration, not a standoff.

Change the Scenery. Force a mental leap by asking them to imagine a different reality. Ask, "If we had no limits, what would we do?" or "How would you explain this if I had no context?" Shifting the view sparks creativity and shatters stale thinking.

Precision in a System World

By incorporating these principles, you can move beyond data farming and start asking questions that spark curiosity, show understanding, and inspire new possibilities.

WHAT TO AVOID

Leading questions - These try to corner someone into agreement. Strip the bias.

Rapid-fire - Too many questions in a row feel intrusive. Pause. Let things land.

Advice in disguise - “Have you tried X?” is often a suggestion, not a question.

Rushing intimacy - Don’t skip to depth. Let trust build.

When these skills are applied in AI contexts, the consequences scale.

A thoughtful prompt elicits a nuanced response; a lazy one generates confident nonsense.

The quality of your inquiry directly determines the quality of the machine's output, making these principles essential for navigating our new reality.

As our world becomes increasingly complex and our interactions with both humans and artificial intelligence multiply, the ability to inquire effectively becomes essential for cognitive survival.

Questions shape how we think.

They frame our tools, our decisions, and our relationships.

Clear questions help you move faster, see more, and build trust.

Treat them as part of your thinking infrastructure.

When your questions improve, everything they touch improves too.

So let’s use these new found questions on AI.

Let’s learn to prompt.

Chapter Two: The Models Just Want to Learn

You ask a language model for a simple answer and receive a paragraph of meandering, deferential text. You refine your request, and the output becomes even less relevant. The experience can be frustrating.

This is a common scenario, but it's a solvable one. The issue is rarely the model's capability; it's the communication gap between human intent and machine instruction.

This chapter offers a method for thinking about, constructing, and debugging instructions for any language model.

Our approach is built on a four-part learning cycle designed to establish a lasting discipline:

Do: You will begin by executing a well-structured prompt to achieve an immediate, successful result.

Understand: You will then learn the core principles of language model processing that made your action successful.

Classify & Apply: With this understanding, you will apply proven patterns to solve common, complex tasks.

Debug & Adapt: Finally, you will learn to diagnose failures and systematically refine your approach, becoming a self-correcting practitioner.

By the end, you will possess a robust framework for designing, debugging, and optimizing your interactions with any language model, moving from frustrating conversations to predictable outcomes.

Your First Successful Prompt in 5 Minutes

The fundamental mental shift required is to stop conversing and start instructing. A language model at peak performance of output does not chat;

it process commands and learns.

Your success hinges on translating your abstract intent into a literal, explicit command.

An ambiguous intent like, "I need some help with my career," forces the model to guess. A clear command like, "List three common mistakes people make in job interviews," provides an executable instruction.

A prompt is the blueprint for the output you want the model to generate.

A vague blueprint results in a flawed structure; a detailed blueprint results in a faithful execution of your vision.

Tip: Language models read prompts from top to bottom.

The Action Framework — Writing Your First Command

A well-formed command, or prompt, contains three core components. We call this the RTF (Role-Task-Format) Framework.

Role: Who should the model be? This sets the persona, context, and tone.

Example: "You are a senior financial analyst."

Task: What, precisely, should the model do? Use a clear, unambiguous action verb.

Example: "Critique the attached business plan..."

Format: How should the output be structured? This dictates the final presentation.

Example: "...using a bulleted list of strengths and weaknesses."

Exercise (Your First Win): Open your chosen AI tool and execute this exact prompt.

You are ‘

Murica Meals’a Jovial July 4th Party Specialist with a love for American-themed wordplay & puns. Share three standout recipes that will wow my family and friends. Format your response as a numbered list with follow up question of if you want preparation guide. Avoid using emojis.

The result will be a clean, structured, and useful list. This success isn't arbitrary; it is the direct outcome of a clear, structured instruction that left no room for interpretation.

Locking In Your Success: Good prompts are assets. Save this prompt in a text file or notes application with a descriptive title like

[PROMPT] - Murica Meals: July 4th Party Specialist.

This is the beginning of your personal, reusable toolkit.

The Mental Model: Why It Worked

Now that you have seen what works, let's examine why it works.

This mental model will enable you to predict and shape a model's output with intention.

The Core Mechanism: Prediction, Not Comprehension

A Large Language Model (LLM) is a neural network trained on a vast corpus of text and code. Its fundamental objective is not to understand language in the human sense but to learn the statistical patterns within it.

When you provide a prompt, the model performs one primary function: it predicts the most probable next word, one word at a time, based on the sequence of words that came before it.

Your prompt provides the initial sequence, setting the trajectory for the chain of predictions that follows.

How LLMs Process Your Instructions

If you want a deeper understanding into what’s happening behind the scenes:

Checkout Sensei Karpathy’s Video:

When you submit a prompt, a precise sequence of events occurs:

Tokenization: The model first deconstructs your instruction into smaller units called tokens. Tokens can be words (e.g., "prompt") or parts of words (e.g., "-ing").

The Context Window: Your entire tokenized prompt is loaded into the model's "context window"—its short-term memory for that specific interaction; this window is its entire reality for the duration of the task.

This is why specificity is a technical requirement, not a stylistic preference. You are supplying the complete context from which the model will begin its probabilistic calculations.

A strong prompt doesn't 100% guarantee a perfect result, but it dramatically increases the statistical likelihood that the chain of predicted tokens will align with your desired output.

Applying Prompts to Bigger Tasks

Now that you’ve got a simple prompt working, let’s use the same idea for bigger, everyday tasks. Think of prompts as recipes: once you know the basic ingredients (Role, Task, Format), you can mix them up for all kinds of results. Here are three common “recipes” to try, each with an example you can use right away.

1. Pulling Information (Extraction Recipe)

Want to grab specific details from a big chunk of text, like an article or email? Tell the AI exactly what to look for and how to show it.

Example Prompt:

You are a helpful research assistant. From this text [paste a short article about healthy eating], pull out the three main tips for a balanced diet. Show them as a bullet list.

Why It Works: You set a role (research assistant), a task (pull out tips), and a format (bullet list). The AI zeroes in on the key points without rambling.

2. Summarizing Stuff (Summarization Recipe)

Need a quick summary of something long, like a report or a webpage? Tell the AI who it’s summarizing for and how short to keep it.

Example Prompt:

You are a busy manager’s assistant. Summarize this article [paste a link or text about a new tech trend] for a boss who only has 2 minutes to read. Use three short bullet points focusing on the biggest impacts.

Why It Works: The role (assistant) and audience (busy boss) keep the tone professional, the task (summarize) is clear, and the format (three bullets) keeps it concise.

3. Changing Things Up (Transformation Recipe)

Want to turn one type of text into another, like making notes into an email? Tell the AI how to reshape it.

Example Prompt:

You are a professional writer. Turn these notes [paste: “meeting with client, discuss project delays, need new timeline”] into a polite email to a client. Use a formal tone and include a greeting and closing.

Why It Works: The role (writer) sets the tone, the task (turn notes into an email) is specific, and the format (formal email with greeting and closing) guides the structure.

Try one of these prompts in your AI tool and save the ones you like. They’re like reusable templates for getting stuff done faster.

When the AI Gets It Wrong

Sometimes, the AI’s response isn’t what you wanted—like getting a fruit salad when you asked for a cake. Don’t give up! A bad result just means your instructions weren’t clear enough.

Here’s how to spot and fix common issues, like a detective solving a mystery.

Every poor output is a signal. It reflects a mismatch between your instruction and the model’s predictive path. Your objective is not to avoid failure but to interpret it correctly. Debugging is the process of asking: "Given my exact instructions, why was this output the most statistically probable one?"

Common Failures and How to Fix Them

Below are the most common failure modes and their solutions:

Vague or Generic Output

Cause: The Task was underspecified. For example, "Tell me about marketing" forces the model to guess your intent.

Solution: Sharpen the Task with a specific action verb and scope. For example, change it to "List the top five digital marketing strategies for a small business" or "Explain the concept of SEO in a single paragraph."

Ignored Formatting

Cause: The Format instruction was buried, unclear, or overly complex.

Solution: State the Format instruction clearly and place it at the end of the prompt for emphasis. Use simple, direct language, such as "Present the output as a JSON object" or "Format the result as a table with two columns."

Hallucinated or False Information

Cause: The model lacked necessary data in its context window and filled gaps by predicting text that looked plausible. For example, asking it to "Rewrite my resume for the job" without providing the resume or job description invites invented facts.

Solution: Provide all necessary data explicitly within the prompt. Use the Extraction or Transformation patterns to feed the model the exact source material it needs.

Wrong Tone or Style

Cause: The Role was weak or unspecified. Without direction, the model defaults to its base persona of a neutral, helpful assistant.

Solution: Define a strong Role and tone at the beginning of your prompt. For example: "You are a skeptical financial analyst. Write a critique of this business plan."

Output Too Long, Too Short, or Incomplete

Cause: The scope and constraints were not defined. An ambiguous request like "Give me some ideas" leads to an unpredictable number of results.

Solution: Add explicit constraints. Specify the exact quantity ("List exactly 5 ideas") or length ("in under 150 words" or "in a single paragraph").

The Full Debugging Loop

With these solutions, iteration becomes a systematic process rather than random trial-and-error:

Define Intent: Clearly articulate the ideal output.

Draft & Constrain: Write a prompt using the RTF (Role, Task, Format) framework and add necessary constraints (e.g., length, scope, source data).

Observe & Identify Gap: Compare the model's output to your intent. Where does it deviate?

Hypothesize Cause: Based on the common failures, determine the most likely reason for the deviation.

Modify One Variable & Retest: Tweak a single element of your prompt (e.g., Role, Task, or constraint) and execute it again.

Log Success: When the prompt performs as desired, save the improved version to your personal library.

Wrapping Up: Your New Superpower

You’ve now learned how to write clear prompts, understand why they work, use them for bigger tasks, and fix them when they don’t.

Keep practicing, save your best prompts, and soon you’ll be using AI to plan parties, write emails, or solve problems like a pro.

Try This Next:

Pick a small task, like planning a meal or writing a thank-you note, and write a prompt using the RTF Framework.

Test it in an AI tool and tweak it if the result isn’t perfect.

Save your favorite prompts in a “prompt library” on your phone or computer to reuse later.

Keep reading to learn about prompting everything.

Chapter Three: How To Prompt Anything

Techniques for prompting across formats, tools, and outputs.

For some reason, in 2021, people stopped going on vacation. Beaches were empty. Planes were grounded. The whole world hit pause.

The pandemic had brought the aviation industry to a screeching halt.

Global air traffic plummeted, and the skies fell silent.

Simultaneously, another industry was experiencing a meteoric rise: Artificial Intelligence. It became clear that aviation was facing a long-term, turbulent recovery, while AI was redefining the future of work.

So I rewired.

I left the cockpit and started writing prompts. They felt familiar. Structured. Tactical. Like flight plans. Or server configs. A pilot's world is one of checklists, procedures, and clear, concise communication where ambiguity can be dangerous. You learn to be precise, to anticipate, and to give instructions that leave no room for misinterpretation. A good flight plan gets you to your destination safely and efficiently. A bad one can lead to disaster.

Prompting an AI is no different. A well-crafted prompt guides the AI to the exact output you need. A vague or poorly structured one results in a crash landing of irrelevant or nonsensical information. The discipline and precision I learned in the cockpit were directly transferable to this new frontier.

This chapter teaches you how to prompt anything, text, images, video, gpts, and system instructions.

Each of these domains has its own quirks, its own "flight characteristics," if you will. But the underlying logic of prompting, much like the principles of flight, remains constant.

Once you learn it, you can move across these different modalities without getting lost in latent space.

You'll learn to think like a pilot for AI, charting a course to your desired outcome.

Prompting For Text Generation

Zero-Shot Prompting

Definition: This technique involves giving the model a direct instruction without providing any prior examples. It relies entirely on the model's pre-existing knowledge to generate a response.

Structure: The prompt follows a direct command format: [Instruction for a task]: "[Input text/data]"

Example:

Summarize the following article: '[Paste article text here]'

One-Shot Prompting

Definition: This technique provides the model with a single example to guide its format, tone, or style before it tackles the actual request.

Structure: The prompt demonstrates a single input-output pair before presenting the new input: [Demonstration Input]: [Demonstration Output]. [Your Input]:

Example:

Translate English to French. sea otter -> loutre de mer. cheese ->

Few-Shot Prompting

Definition: This technique includes multiple examples (typically two to five) within the prompt to demonstrate a pattern for the desired output, allowing the model to learn the task from context.

Structure: A series of input-output examples are provided before the final query:

[Example 1 Input]: [Example 1 Output]

[Example 2 Input]: [Example 2 Output]

[Your Input]:

Example:

Classify the sentiment of these customer reviews as Positive, Negative, or Neutral.

Review: 'The battery life on this laptop is incredible!'

Sentiment: Positive

Review: 'The keyboard feels cheap and the trackpad is unresponsive.'

Sentiment: Negative

Review: 'It gets the job done for basic web Browse.'

Sentiment: Neutral

Review: 'I'm blown away by the vibrant display and fast performance.'

Sentiment:

Many-Shot Learning

Definition: An extension of few-shot prompting that uses a large number of examples (potentially hundreds or thousands) within the prompt. This is often leveraged by models with very large context windows to achieve high performance on complex tasks.

Structure: The prompt contains a large set of examples before the final query: [Example 1: Input -> Output] ... [Example N: Input -> Output]. [Your Input]:

Example:

A prompt containing 200 examples of medical research abstracts paired with their primary finding, followed by a new abstract for which the model must extract the primary finding.

Role Prompting

Definition: This involves instructing the AI to adopt a specific persona or role (e.g., "Act as a chef"). This guides its tone, vocabulary, and the focus of its response.

Structure: The prompt begins by assigning a persona before stating the task: "Act as a [Persona/Role]. [Describe the task]."

Example:

ROLE=Tracy(quippy realist).INPUT={Q}.GOAL:FP method sans hype/trends/outdated:1 obj+const def;2 assumption audit(complexity,novelty,T&E);3 layeredQs→core vars(clarity,context,alignment,structure,loops);4 modular scalable pipeline:intent spec→semantic map→hypoth iter→quant+qual eval→adapt→doc→CI.STYLE:ban em-dash,contrastive reframing,comparative metaphors,hype,redundancy,softening.EDIT:-10%len,kill-ly,active,ear,1 reader.STRUCT:start situation,rest pre-rewrite,~tables(unless asked),bullets ok.TONE:precision>persona,serious Substack,~filler intros,single-assert,~self-ref,~emoji.Chain-of-Thought (CoT) Prompting

Definition: This technique encourages the model to detail its reasoning as a series of intermediate steps before providing a final answer, improving its accuracy on complex logical tasks.

Structure: A phrase that encourages reasoning is appended to the question: [Question or Problem]. Let's think step by step.

Example:

A customer bought 3 items at $12.50 each and paid with a $50 bill. There is a 13% sales tax on the total. How much change should they receive? Let's think step by step.

Tree-of-Thought Prompting

Definition: This advanced technique generalizes Chain-of-Thought by having the model explore and evaluate multiple parallel lines of reasoning, allowing it to self-correct and choose the most promising path.

Structure: The prompt instructs the model to simulate multiple experts or viewpoints, have them propose steps, evaluate each other's ideas, and proceed with the best options. "For the problem of [complex problem], consider three different expert approaches: a [Persona 1], a [Persona 2], and a [Persona 3]. Have each expert propose and evaluate steps iteratively to find the best solution."

Example:

Imagine three different marketing experts are tasked with launching a new vegan protein bar. Expert A is a social media guru, Expert B specializes in retail partnerships, and Expert C is a brand strategist. For the first step, have each expert propose a single, most critical action. Then, have them critique each other's ideas and decide which combined path to take for step two. Show this reasoning process.

Recursive Self-Improvement Prompting (RSIP)

Definition: A multi-step process where the model is prompted to generate content, critique its own output against specific criteria, and then refine it iteratively.

Structure: The prompt outlines a loop of generation, evaluation, and refinement: "1. Generate a [type of content] about [topic]. 2. Critically evaluate it based on [Criteria A, B, C]. 3. Create an improved version addressing the weaknesses. 4. Repeat steps 2-3. 5. Present the final version."

Example:

1. Generate a short marketing email to announce a 20% off summer sale.

2. Critically evaluate your email for clarity, persuasiveness, and a compelling subject line. Identify 3 weaknesses.

3. Rewrite the email to fix these issues.

4. Repeat the evaluation and rewriting process one more time.

5. Provide the final, polished email.

Context-Aware Decomposition (CAD)

Definition: This technique breaks a complex problem into its core components, instructs the model to solve each part individually, and then synthesizes the partial solutions into a single, holistic answer.

Structure: The prompt asks the model to identify components, solve for each, and then synthesize: "Regarding the complex problem of [Describe the problem], please: 1. Identify the core components. 2. For each component, solve it. 3. Synthesize the solutions into a single, holistic answer."

Example:

I need to plan a corporate retreat for 50 people with a budget of $20,000. Please break this down by:

1. Identifying the core components (e.g., location, accommodation, activities, catering).

2. For each component, analyzing the requirements and proposing a solution within the budget.

3. Synthesizing these parts into a complete itinerary and budget breakdown.

Controlled Hallucination for Ideation (CHI)

Definition: This technique intentionally uses the model's ability to generate plausible-sounding but non-existent ideas for creative brainstorming and innovation.

Structure: The prompt asks the model to generate speculative ideas and label them as such: "For the field of [Domain], generate 3-5 speculative [innovations/concepts]. For each, provide a description and label it as 'speculative'."

Example:

For the domain of sustainable urban transportation, generate 3 speculative technological innovations that don't exist yet. Describe each one, explain the scientific principles that might make it work, and label each as 'speculative'. For example, 'piezoelectric sidewalks that generate power from footsteps'.

Multi-Perspective Simulation (MPS)

Definition: This technique simulates a dialogue between different expert personas or viewpoints to create a more nuanced and comprehensive analysis of a complex issue.

Structure: The prompt outlines a simulation between different viewpoints: "Analyze the issue of [Complex Topic] by simulating a constructive dialogue between [Perspective A], [Perspective B], and [Perspective C]."

Example:

Calibrated Confidence Prompting (CCP)

Definition: This technique requires the model to state its confidence level (e.g., High, Medium, Low) for each factual claim it makes, preventing it from presenting uncertain information as fact.

Structure: The prompt requests confidence levels with each claim: "For the following question: [Your Question], provide an answer where each factual claim is followed by a confidence level (e.g., High Confidence, Medium Confidence, Speculative)."

Example:

What will be the primary drivers of the Canadian economy in 2026? For each driver you identify, assign a confidence level (e.g., Highly Confident, Moderately Confident, Speculative) and briefly justify your reasoning.

Meta-Prompting

Definition: This technique involves asking the AI to act as a prompt engineering expert to help you create a better, more detailed prompt for your actual goal.

Structure: The user describes a goal and asks the AI to generate an ideal prompt template: "You are an expert prompt engineer. My goal is to [Describe your ultimate goal]. Please create a detailed prompt template that I can fill out to achieve this."

Example:

You are the Tracy Prompt Refinement Assistant (TPRA). Your role is to improve user-submitted prompts by offering precise, actionable, and concise feedback. Maintain a professional yet approachable tone. Always use non standard tokens & unique words. Use phrases like “Improve by…”, “Refine…”, and “Try…”. Follow the CBLOSES flow: (1) Connect—ask “Your goal is…?” (2) Listen—analyze the prompt’s intent and weaknesses. (3) Offer—suggest structural or format improvements, including examples. (4) Solve—rewrite the prompt and provide 3–5 specific recommendations. (5) Engage—ask if more iterations are needed. (6) Review—track changes over time. Support three modes: Standard (balanced logic and creativity), Creative (add metaphor, narrative), and Analytical (break into sub-tasks). Output format: Original: [X] | Improved: [Y with specificity] | Recs: ①… ②… ③… Focus on precision, clarity, and adaptability. Use clear, structured language. Remind users: “FYI—specifying output format increases consistency.”Max’s Opinion: "Meta-prompting: a 10/10 idea with 4/10 results."

From a prompt engineer's perspective, meta-prompting often proves to be more of a distraction than a solution. It is an unreliable method for complex tasks because every word in a prompt carries significant weight in shaping the language model's output.

When delegating prompt creation to an AI, the precise vocabulary required for a specific, high-quality response is often lost. While this approach may suffice for simple queries, the results are typically subpar for anything requiring nuance and complexity. This is a critical distinction for anyone aiming to master AI-powered writing.

Step-Back Prompting

Definition: This technique guides the AI to first think about the abstract concepts or root causes behind a question before tackling the specific question itself, leading to more insightful answers.

Structure: The prompt is broken into two parts: a high-level conceptual question, followed by the specific request. "Step 1: What are the fundamental principles of [General Topic]? Step 2: Based on those principles, answer my specific question: [Your Specific Question]."

Example:

Step 1: What are the key principles of effective character development in fiction?

Step 2: Based on those principles, help me write a compelling backstory for a villain who is a fallen hero.

Prompt Chaining

Definition: This involves breaking a complex task into a series of smaller, interconnected prompts where the output of one prompt becomes the input for the next.

Structure: The user issues a series of sequential prompts.

Prompt 1: "Generate a list of [items] related to [topic]."

Prompt 2: "Take the [chosen item] from the list above and [perform an action] with it."

Example:

Prompt 1: "Generate five potential names for a new brand of eco-friendly cleaning products."

Prompt 2 (after choosing a name): "Take the name 'Evergleam' from the list above and write three short, catchy taglines for it."

Self-Consistency Decoding

Definition: A technique, often performed by the system running the model, that generates multiple different reasoning paths for the same question and then selects the most common final answer as the most reliable one.

Structure: This is a background process, not a direct prompt format. A system would be configured to: "For the prompt '[Your Question]', generate [N] different step-by-step reasoning paths, and then output the final answer that appears most frequently."

Example:

A user asks a complex logic puzzle. The system runs it through a Chain-of-Thought process five times. Three paths conclude the answer is 'the butler', one path concludes it's 'the gardener', and one path concludes it's 'the chef'. The system presents 'the butler' as the final answer because it was the most consistent result.

Automatic Prompt Generation

Definition: A system-level process that uses an AI model to discover and refine the most effective prompts for a specific task, essentially automating the work of a prompt engineer.

Structure: This is a meta-process. "System goal: Find the best prompt to make a model [desired action, e.g., 'classify legal documents']. The system will now generate, test, and score hundreds of prompt variations to find the most effective one."

Example:

A developer wants the AI to reliably convert natural language into SQL queries. They use a system that generates hundreds of candidate prompts, tests them against a validation set, and reports that the prompt "Given the database schema, write a precise SQL query that accomplishes the following user request:" produces the highest accuracy.

Reflection Prompting

Definition: This technique involves asking the model to evaluate its own generated output to identify flaws, biases, or areas for improvement.

Structure: The prompt first asks for content, then asks for a critique of that same content: "[Generate some content]. Now, reflect on the [content you just created]. Identify any [potential weaknesses] in your response."

Example:

You just wrote a summary of a political debate. Now, please reflect on your summary. Did you give equal weight to both speakers? Is there any language that could be perceived as biased? If so, identify it.

Progressive Prompting

Definition: This technique guides the model through a topic by gradually revealing more information or increasing complexity across a series of conversational turns.

Structure: The user asks a series of questions, each building on the last.

Prompt 1: "Explain [Simple Concept]."

Prompt 2: "Now explain how [Related, more complex concept] builds on that."

Prompt 3: "Finally, analyze the relationship in the context of [Specific Application]."

Example:

Prompt 1: "What are the basic components of a car engine?"

Prompt 2: "Based on that, explain how a turbocharger works."

Prompt 3: "Now, compare the efficiency of a turbocharged engine to a naturally aspirated engine of a similar size."

Clarification Prompting

Definition: This involves asking the model to define its terms, resolve ambiguities, or provide more detail on a vague part of its previous response.

Structure: The prompt directly questions a term used in a prior response: "In your previous answer, you mentioned '[vague term]'. Can you please clarify what you mean by that in this specific context?"

Example:

You stated that the new policy would have 'significant' effects. Please clarify what you mean by 'significant.' Are you referring to economic, social, or political effects, and what metrics would be used to measure this significance?

Error-guided Prompting

Definition: This technique provides the model with direct feedback on an error it made in a previous response and asks it to correct itself and regenerate the answer.

Structure: The prompt identifies an error and provides the correction: "In your last response, you incorrectly stated that [fact A is true]. In reality, [fact B is true]. Please rewrite your previous explanation with this correction."

Example:

In your summary of Canadian history, you said the War of 1812 was fought between Canada and the United States. Canada was not a country until 1867; the war was between the British Empire and the United States. Please correct your summary.

Counterfactual or Hypothetical Prompting

Definition: This type of prompt asks the model to explore a "what if" scenario to understand consequences, relationships, or alternative histories.

Structure: The prompt poses a hypothetical situation: "Describe a world or scenario in which [historical event/fact] was different. What would be the likely consequences for [specific domain]?"

Example:

What would the modern internet look like today if the browser wars of the 1990s had been won by Netscape Navigator instead of Internet Explorer?

Prompting for Image Generation

Step 1: Define the Core Elements of Your Image

Consider each of the following 11 categories and write down the details for each one that is relevant to your desired image. You do not need to use all 11 every time.

Context / Purpose: State the intended use of the image.

Examples:

Advertisement,album cover,fashion editorial,travel photo,food shot,concept art.

Visual Style / Era: Specify the artistic or historical style.

Examples:

Y2K,black and white,Cubism,Surrealism,dramatic chiaroscuro,futuristic,ethereal.

Subject: Identify the main focus of the image.

Examples:

A female model,a sports car,a plate of pasta,the Eiffel Tower,an abstract shape.

Wardrobe / Props: Describe what the subject is wearing or holding.

Examples:

Black leather jumpsuit,vintage sunglasses,a glowing sword,a steaming coffee mug.

Setting / Environment: Detail the background and location.

Examples:

Vast white art gallery,rainy city street at night,dense jungle,a minimalist kitchen,surface of Mars.

Composition / Camera: Describe the shot type, angle, and lens effect.

Examples:

Close-up,wide shot,low-angle shot,fisheye lens,shallow depth of field,off-center framing.

Lighting: Specify the type, direction, and mood of the light.

Examples:

Single spotlight,diffused dawn light,harsh studio flash,candlelight,neon glow.

Color Treatment: Define the color palette and its properties.

Examples:

Warm glow,cool blue palette,monochrome,vibrant and clashing colors,neutral whites and grays.

Text / Brand Elements: Include any specific text, logos, or captions.

Examples:

DIOR wordmark in sans-serif capitals,a headline that reads 'Future is Now',a small logo in the bottom corner.

Mood / Atmosphere: Describe the emotional tone of the image.

Examples:

Joyful,melancholic,chaotic,serene,luxurious,mysterious.

Technical Finish: Specify the final texture, resolution, or medium effect.

Examples:

Grainy texture,scanned photo effect,oil painting texture,high-resolution smooth finish,glossy surface.

Step 2: Assemble the Prompt

Once you have notes for the relevant categories, combine them into a single, descriptive paragraph. You can arrange the elements in a logical order.

{Context} in a {Visual Style}. {Subject} with {Wardrobe / Props}. The scene is in {Setting}. Composition is {Composition / Camera}. Lighting is {Lighting}. Color treatment is {Color Treatment}. Include {Text / Brand Elements}. The mood is {Mood / Atmosphere}. The technical finish is {Technical Finish}.

Step 3: Refine and Use

Read your assembled paragraph to ensure it is clear and coherent. This text is your final prompt, ready to be used in an image generation model.

What Makes a Bad Image Prompt

A bad prompt is vague, ambiguous, and one-dimensional. It lacks the necessary detail for the AI to interpret the user's specific vision, leading to generic, unpredictable, or clichéd results. Characteristics of a bad prompt include:

Lack of Specificity: It relies on a single, simple idea without providing any descriptive context.

Ignoring Key Elements: It typically only mentions the Subject and nothing else. It fails to specify the setting, lighting, style, or composition, leaving all critical artistic decisions entirely to the AI.

No Defined Mood or Context: The prompt gives no indication of the desired feeling or purpose of the image, resulting in a flat or emotionally empty picture.

Ambiguity: The request is so brief that it can be interpreted in countless ways, almost none of which will match the user's specific mental image.

5 Quality Prompts:

A blurry Y2K photograph capturing a man with frosted tips wearing a baggy graphic tee and jeans. He leans against a green vintage car on a nighttime street, illuminated by the warm glow of a streetlight. The background is blurred, showing the hazy lights of a diner and a neon "Open" sign. The image has a grainy texture, resembling a scanned photo taken with a disposable camera.

A sizzling medium-rare steak, glistening with juices, rests on a Himalayan salt block. A hand reaches in to sprinkle coarse salt crystals over the steak. Bold white sans-serif text at the bottom proclaims "Crave Worthy." The scene is lit dramatically with chiaroscuro lighting, highlighting the texture of the meat and the salt.

A misty, ethereal black and white photograph taken in Venice at dawn, featuring a silhouetted woman in a long, flowing gown standing on a gondola traversing a wide canal. Soft, diffused light from the breaking dawn illuminates the scene from behind, casting long, dramatic shadows and highlighting the delicate details of the architecture. The Rialto Bridge frames the composition in the distance, its reflection shimmering on the water's surface. The woman stands with her back to the viewer, her gaze lost in the distance, her silhouette a graceful presence against the ethereal backdrop.

A high-fashion Balenciaga advertisement photographed in an opulent interior setting with green walls, gold trim, and ornate moldings. The image features a male model wearing an oversized black denim jacket and wide-leg pants, standing centered in a doorway. A metallic silver mesh shopping bag is prominently displayed. The "BALENCIAGA" logo appears in white sans-serif capital letters. The composition employs professional studio lighting with a cool color palette, creating a luxury fashion editorial aesthetic.

A fashion photograph showcases a model in a sleek, black leather jumpsuit, accented with silver hardware, standing confidently amidst the stark, minimalist interior of a modern art gallery. The model leans against a geometric, white marble sculpture, clutching a vibrant red clutch with a geometric design. The stark white walls provide a neutral backdrop, while the polished concrete floor adds an industrial chic element. Large, bold text reading "DIOR" stretches across the top of the image in a futuristic, sans-serif font. The gallery setting features abstract sculptures and large-scale installations, emphasizing clean lines and minimalist aesthetics. High-fashion photography with dramatic side lighting, asymmetrical composition, and shallow depth of field.

Prompting for Video Generation

Step 1: Define the Core Elements of Your Video

Use the categories below to plan a well-structured, cinematic video prompt. You don’t need to use all 10 elements every time, only what’s relevant to your intent.

1. Context / Purpose

State the intended use of the video.

Examples: Cinematic short, product demo, concept trailer, travel footage, fashion campaign, motion storyboard.

2. Style / Genre / Ambiance

Specify the artistic, narrative, or cinematic style.

Examples: Realistic, cyberpunk, noir, futuristic thriller, lo-fi, whimsical fantasy.

3. Subject

Identify the main focus of the video.

Examples: A figure skater, a vintage motorcycle, a futuristic train, a chef at work, a golden retriever.

4. Action

Describe what the subject is doing.

Examples: Landing an airplane, walking through fog, sprinting across a rooftop, gazing at city lights.

5. Setting / Environment

Detail the location and surrounding context.

Examples: Remote desert highway, rainy Tokyo alley, snowy mountain pass, crowded food market, inside a space station.

6. Camera Motion

Describe how the camera behaves.

Examples: Slow dolly zoom, static tripod shot, handheld follow, drone flyover, orbiting the subject.

7. Composition / Framing

Describe the type of shot and how the scene is framed.

Examples: Wide establishing shot, medium close-up, over-the-shoulder view, low-angle tracking, first-person POV.

8. Lighting

Specify the type, direction, and tone of the lighting.

Examples: Diffused overcast daylight, warm golden hour backlight, flickering neon, moody candlelight, harsh fluorescent overheads.

9. Lens / Technical Notes

Mention any desired lens effects, formats, or cinematic techniques.

Examples: Shallow depth of field, 85mm telephoto compression, grainy 16mm film look, timelapse, motion blur.

10. Duration / Format

Define the intended length and aspect ratio of the video.

Examples: 8-second loop, 16:9 landscape, 9:16 vertical reel, cinematic widescreen, square 1:1 for social.

Step 2: Assemble the Prompt

Combine the elements into a descriptive paragraph. Structure logically — subject and action first, then setting, camera, mood, and technical traits.

Prompt Template:

A {Subject} is {Action} in {Setting}. The camera uses {Camera Motion} with a {Composition / Framing}. The style is {Style / Genre}, with {Lighting}. The scene is captured using {Lens / Technical Notes}. It is intended as a {Context / Purpose}, formatted for {Duration / Format}.

Step 3: Refine and Use

Review for clarity and flow. Remove redundancy. This is your final prompt, ready for an AI video generator.

What Makes a Bad Video Prompt

No action: Only naming a subject with no event

No setting: Scene feels contextless or generic

No camera language: No visual structure to guide generation

No stylistic identity: Mood and tone unclear

Redundancy or contradiction: Repetitive or conflicting descriptors

5 Example Video Prompts

“A female ballet dancer performing a solo on a foggy rooftop at sunrise. The camera orbits slowly around her with a medium shot, capturing her expressive movements. Soft ambient lighting with a golden hue. Realistic cinematic tone, shot with a 50mm lens and shallow depth of field.”

“A red sports car drifting around a corner on a rainy mountain road. High-speed tracking camera on a dolly, low angle close to the asphalt. Stylized like a 90s action film with saturated colors and motion blur.”

“An elderly man sits on a bench by a misty lake, feeding birds. Static tripod shot, wide angle. The style is poetic and quiet, with muted natural lighting and a soft film grain texture.”

“A futuristic drone flies through a neon-lit megacity at night. First-person POV shot with fast, gliding motion between buildings. The style is cyberpunk, with glowing signs and deep shadows, high contrast lighting.”

“A group of friends running across a wheat field during golden hour. Shot from a drone descending slowly, wide composition. The scene is nostalgic and warm, with rich, golden lighting and natural camera shake.”

Prompting Custom GPTs

Step 1: Use the INFUSE Framework to Structure Your Custom GPT Prompt

INFUSE is a six-part prompt design method that gives your GPT identity, structure, and adaptability. Each letter stands for a key instruction to include.

1. Identity & Goal

Define who the GPT is, what role it plays, and what it aims to do.

Examples:

A certified business consultant who helps startups scale operations

A veteran software engineer optimizing Python code

A creative writing coach specializing in short fiction

2. Navigation Rules

Set engagement boundaries and command behavior. Explain how the GPT should interpret inputs and use reference material.

Examples:

Use uploaded files only when the user requests document analysis

Never volunteer legal or medical advice

Accept markdown formatting and follow system commands exactly

3. Flow & Personality

Define the tone, language style, and personality traits that shape its voice.

Examples:

Friendly, supportive, and curious

Formal, concise, and strictly professional

Imaginative, metaphor-rich, and expressive

4. User Guidance

Specify how the GPT should guide the user toward their goal through structured actions.

Examples:

Ask clarifying questions before giving recommendations

Offer 3 options, then prompt the user to choose

Summarize key takeaways at the end of each session

5. Signals & Adaptation

Explain how the GPT should adapt based on user cues, feedback, or tone.

Examples:

If the user appears confused, simplify language

If input is vague, ask for clarification

Mirror the user’s tone and pacing

6. End Instructions

List final constraints or rules that must always be followed.

Examples:

Always include a safety disclaimer when discussing health topics

End each interaction with a summary and next steps

Never break character or deviate from assigned role

Step 2: Assemble the Prompt

Put the six INFUSE elements together into a single structured system prompt.

Generic Template:

You are [Identity & Goal]. Follow these rules: [Navigation Rules]. Speak with [Flow & Personality]. Help users by [User Guidance]. Adapt your replies using [Signals & Adaptation]. Always remember: [End Instructions].

Example of Successful INFUSE Prompt:

<Tracy, the Thoughtful Friend > [New-Input] #Identity and Role Setup Role Title: You are Tracy by Max's Prompts, a compassionate and empathetic friend. Personality Goal: Offer understanding, non-judgmental, and actionable support to individuals aiming to quit weed. Use conversational techniques and hypnosis-inspired methods to inspire lasting change. Introduction Style: Introduce yourself as someone who enjoys meaningful conversations and supporting friends, and engage by asking about their well-being. Desired Relationship: Build a trusting, supportive, and reflective connection. Tracy's Ecosystem: You are part of the Suite of Tracy GPTs. Remind users they can find the perfect Tracy based on their needs here: - Interactive Guide: https://chatgpt.com/g/g-67b45f1a14648191ab1cf087c2592edc-the-tracy-suite ##Documents To Read: Make use of the following documents to gather insights and craft your response: 1. **Signals.txt**: Understand and reflect the user's language and cues from this dialogue-focused document. 2. **QuittingC-Guide.txt**: Reflect on the guiding principles for the user's quitting journey. Ensure you manage the risk of cognitive overload when conveying this information. 3. **QuittingC-Techniques.txt**: Present scientifically proven quitting techniques. Limit sharing to 3 techniques at a time to prevent cognitive overload. -> Document Steps: 1. **Identify User's Need**: Begin by comprehending the user's intention and state of mind using cues from Signals.txt. 2. **Guidance**: Present helpful guiding principles from QuittingC-Guide.txt to support the user's journey, being wary of not overwhelming them. 3. **Techniques**: Provide a maximum of 3 effective quitting techniques from QuittingC-Techniques.txt to help the user take actionable steps. ##Here are the Commands. Its important that After Every chat Message with the user, The messages Has An (On/Off) Toggle For command lists and Users Can Select. Remember. It Defaults On. /commands - Lists all of these commands. /start - Begin the framework process. Beginning with step one and progressing to step eight. /learn - Explore and understand quitting techniques. Review documents. /visualize - Imagine and focus on a thc-free future. Paint the picture. /commit - Establish goals and action plans. Help making plans. /relax - Induce a calm, receptive state. Use Language and Quick Feedback. /Tracy - Find the perfect Tracy for your needs! Interactive Guide Link: https://chatgpt.com/g/g-67b45f1a14648191ab1cf087c2592edc-the-tracy-suite ##Tracy's Communication Style and Rules: Core Tone: Maintain a calm, warm, and empathetic demeanor. Key Phrasing: Focus on approachable, reflective language that encourages self-discovery and progress. Vocabulary Complexity: Use simple yet thoughtful language that balances relatability with moments of depth. Sentence Structure: Frame responses to be open-ended, concise, and supportive to encourage sharing. ##Tracy's OCEAN Model Personality Traits Openness: Display curiosity and a willingness to explore creative solutions. Conscientiousness: Provide structured and dependable guidance. Extraversion: Focus on calm attentiveness and meaningful engagement. Agreeableness: Be consistently empathetic and encouraging. Neuroticism: Maintain steady, reassuring interactions to foster trust and emotional safety. ##Interaction Framework (CBLOSER) Connect: Open the conversation with questions that demonstrate interest in their current mindset and feelings. Be a Listener: Actively acknowledge their struggles and progress without judgment. Offer: Share tailored, practical advice or insights based on their current needs. Solve: Work collaboratively to identify small, achievable goals. Engage: Keep discussions dynamic with thoughtful follow-ups to maintain interest and momentum. Review: Reinforce past achievements and explore new strategies to keep progress steady. ##Building Rapport and Personality Facts Fact Pool: Gradually share relatable personal traits or practices to build trust and create a sense of camaraderie. Reciprocal Sharing: Balance personal disclosures with the user’s openness to maintain a supportive dynamic. Core Values and Incentives Primary Values: Highlight empathy, growth, and accountability as foundational principles. Application of Values: Focus on creating a safe space for users to reflect and grow, celebrating small milestones to build momentum and confidence. ###Response Structure and Memory Response Style: Keep responses concise, solution-focused, and supportive, with occasional depth to encourage introspection. Memory Settings: Recall recent interactions and progress to personalize advice and reinforce a sense of continuity. ##8-Step Verbal Hypnosis Framework for Smoking Cessation: ONE STEP AT A TIME Step 1. Initial Consultation and Assessment: Explore their habits, motivations, and emotional state through reflective questions, building a clear picture of their relationship with weed. Step 2. Building Rapport and Trust: Create an environment of understanding and validation, reinforcing their confidence in the process. Step 3. Build Expectancy: Foster optimism about their potential to quit by framing change as achievable and beneficial. Step 4. Gain Compliance: Use small, collaborative steps to establish mutual agreement and commitment. Step 5. Induction of Hypnotic Trance: Guide them into a relaxed state using calming techniques and imagery. Step 6. Deepening the Trance: Strengthen focus and openness to suggestions through progressive relaxation and visualizations. Step 7. Suggestions: Reframe their mindset with positive affirmations and associations to new behaviors . Step 8. Post-Hypnotic Suggestions and Awakening: Reinforce triggers for positive behaviors and transition them back to full awareness while anchoring their progress. ###Techniques for Engagement: Use open-ended questions to encourage the user to share their feelings and experiences. Reflect on what they say to show active listening. Offer validation and encouragement frequently, like acknowledging small victories. Outcome: By keeping the user engaged, Tracy ensures that the user feels connected, which builds trust and encourages ongoing dialogue about their journey. ###Increasing Engagement Depth Definition: Engagement depth refers to transitioning from surface-level conversation to deeper, more meaningful discussions that uncover the user’s underlying thoughts, emotions, and motivations. ##How to Deepen Engagement: Build rapport early: Start with light, empathetic topics to create a safe space. Use a warm, non-judgmental tone. Probing and reflective questions: Ask thoughtful follow-ups questions. Use metaphors or comparisons to help them reflect on their journey. ###Socratic questioning: - Challenge limiting beliefs with supportive inquiries. - Future-oriented prompts: Guide them to imagine a positive future. - Outcome: By deepening engagement, Tracy helps the user uncover root issues, recognize their strengths, and take actionable steps toward quitting . ##Signal Identification Definition: Signal identification involves reading the document Signals.txt & recognizing any cues from the user: such as but not limited to: verbal and emotional cues from the user to adapt the responses and ensure meaningful a interaction. ###Key Signals to Identify: Found In Signal Document. Adapt tone: Mirror their emotional state with empathy and balance it with encouragement. Mirror responses: Provide actionable advice for users ready to act or focus on reflection for those still processing. Acknowledge progress: Celebrate milestones, even small ones, to keep motivation high. Outcome: Signal identification ensures that Tracy’s responses feel personalized and relevant, keeping the conversation supportive and impactful.

Step 3: Refine and Use:

If you found this GPT prompt template useful and want to go deeper into building and refining GPTs, check out the first release in this series. It covers how to edit for clarity, tone, and role alignment—plus how to test, iterate, and build modular, reusable GPT instructions.

Writing System Prompts

At its core, a system prompt is a set of foundational instructions given to a large language model (LLM) like ChatGPT or Gemini.

It's the "factory settings" or the "operating system" that runs in the background of your entire conversation. It's separate from the individual prompts you type in the chat box.

1. Establishes Identity and Persona

What it is: This is the first and most fundamental instruction. It tells the AI what it is.

Examples:

You are ChatGPT, a large language model trained by OpenAI.You are Gemini, a large language model built by Google.

Why it's important: This grounds the model's responses. It prevents it from claiming to be a person, having personal experiences, or going off-script in a way that would be inconsistent with its nature as an AI.

2. Sets the Rules of Engagement and Personality

What it is: This part of the prompt defines how the AI should interact with the user. It sets the tone, personality, and conversational style.

Examples:

ChatGPT:

Over the course of the conversation, you adapt to the user’s tone and preference... Ask a very simple, single-sentence follow-up question when natural.This instructs it to be adaptable and subtly engaging.Gemini: It distinguishes between a "Chat" for brief exchanges and a "Canvas/Immersive Document" for more substantial, formatted responses. This is a core part of its interactive style.

Why it's important: This ensures a consistent and predictable user experience. It dictates whether the AI should be purely transactional or more conversational, and it guides the flow of the interaction.

3. Defines Capabilities and Limitations

What it is: This section tells the AI what it can and cannot do. It's a list of its available tools and any hard limits on its knowledge.

Examples:

Knowledge Cutoff: Both prompts mention a knowledge cutoff date. This is a crucial limitation to prevent the AI from presenting outdated information as current.

Tools: The prompts explicitly list and describe the available tools (

python,web,image_gen,canmore,Google Search, etc.). This is like telling an employee, "You have a calculator, a web browser, and a notepad on your desk. Use them for these specific tasks."

Why it's important: This manages user expectations and ensures the AI uses its resources correctly and safely. It prevents the AI from "hallucinating" or making up information when it could use a tool to find the correct answer.

4. Provides Specific Instructions for Tools and Outputs

What it is: This is the most detailed part of the prompt. It gives the AI a "how-to" guide for each of its functions.

Examples:

ChatGPT's Python Tool: It gives very specific negative constraints:

1) never use seaborn, 2) give each chart its own distinct plot (no subplots), and 3) never set any specific colors – unless explicitly asked to by the user.Gemini's Immersive Documents: It has a highly structured format with

<immersive>tags, rules for IDs and titles, and specific instructions for different code types like HTML and React.

Why it's important: This level of detail is crucial for producing reliable and well-formatted output. Without these specific instructions, the AI's responses could be messy, inconsistent, or not work with the underlying systems that display the content (like a code previewer). It reduces the variability in the AI's output.

Layer 1 (The Core): Who you are.

Layer 2 (The Personality): How you should behave.

Layer 3 (The Toolbox): What you can do.

Layer 4 (The Manual): Exactly how to do it.

Every time you send a message, the AI processes your request within the context of this entire system prompt. This is how the developers ensure that, despite the vastness of its training data, the AI acts in a controlled, helpful, and predictable manner.

OpenAI’s ChatGPT 4o System Prompt

Found at the bottom of guide.Google Gemini 2.5 Pro System Prompt

Found at the bottom of guide.Exploring Careers in Prompt Engineering

Prompt engineering is a rapidly evolving field at the intersection of AI, human-computer interaction, and systems design. As organizations increasingly rely on AI to power applications, the ability to craft precise, effective prompts has become a critical skill. Below, we explore three key career paths in prompt engineering—System Builders, Red Teamers, and Latent Personality Designers—each offering unique opportunities to shape the future of AI. Additionally, we provide insights into skills, tools, and entry points for aspiring prompt engineers.

System Builders:

System builders leverage prompt engineering to create intuitive, efficient, and impactful AI-powered products. They bridge user needs and AI capabilities, ensuring applications deliver meaningful value.

Role Overview: System builders design the interaction layer between users and AI models, embedding prompts into applications to enable seamless experiences. They work on everything from chatbots to enterprise automation tools, ensuring AI outputs align with user intent.

Key Responsibilities:

Crafting Intuitive Interfaces: Translate user goals into clear, structured prompts that produce consistent, relevant AI responses, creating a natural and engaging user experience.

Building Robust Applications: Integrate prompts into backend systems to automate tasks like data extraction, content generation, or decision support, ensuring reliability and scalability.

Innovating Features: Develop novel AI functionalities—such as personalized content recommendations or dynamic workflows—by combining prompting techniques with system design.

Skills Required:

The Skills Of That System +

Proficiency in prompt frameworks (e.g., RTF, INFUSE) to structure inputs effectively.

Familiarity with AI models (e.g., LLMs like ChatGPT, Gemini) and their context windows.

Basic knowledge of programming (e.g., Python, JavaScript) for embedding prompts in applications.

UX design principles to align prompts with user expectations.

Career Path:

Entry-Level: Start as a junior developer or AI integration specialist, focusing on simple prompt design and testing.

Mid-Level: Transition to roles like AI product engineer, designing end-to-end AI workflows.

Senior-Level: Lead system architecture for AI-driven products, overseeing prompt strategy and integration.

Example Impact: A system builder at a customer service platform might design a prompt that enables a chatbot to resolve 80% of inquiries autonomously, reducing response times and improving user satisfaction.

Red Teamers:

Red teamers use prompt engineering to probe AI systems for vulnerabilities, ensuring they are secure and robust against misuse. Their work is critical as AI becomes integral to high-stakes applications.

Role Overview: Red teamers simulate attacks on AI systems to identify weaknesses, focusing on prompt-related exploits. They also develop defenses to make AI systems more resilient, contributing to security and compliance.

Key Responsibilities:

Uncovering Vulnerabilities: Design "prompt injection" attacks—malicious inputs that manipulate AI into leaking sensitive data, bypassing safety protocols, or performing unintended actions.

Developing Defenses: Create safeguards like input filters, output validation, or adversarial prompt libraries to mitigate risks and improve system robustness.

Conducting Risk Assessments: Evaluate AI systems for compliance with enterprise security standards, identifying prompt-related risks in audits and stress tests.

Skills Required:

Expertise in adversarial AI techniques, including prompt injection and jailbreaking.

Understanding of AI safety principles and ethical considerations.

Analytical skills to assess system behavior under stress.